“Unveiling the Underdogs: Challenging Nvidia’s Reign in AI Innovation”

Introduction

Nvidia has long been a dominant force in the realm of artificial intelligence, primarily due to its powerful graphics processing units (GPUs) that have become the backbone of AI computation. However, as the AI landscape evolves, new challenges to Nvidia’s supremacy are emerging, often overlooked amidst the company’s continued success. These challenges stem from a combination of technological advancements, competitive innovations, and shifting market dynamics. Companies are increasingly exploring alternative hardware solutions, such as custom AI chips and field-programmable gate arrays (FPGAs), which promise greater efficiency and specialization for specific AI tasks. Additionally, the rise of open-source AI frameworks and the democratization of AI tools are enabling smaller players to innovate and compete. As the demand for AI capabilities grows across industries, Nvidia must navigate these challenges to maintain its leadership position in an increasingly competitive and diverse market.

Emerging Competitors in AI Hardware

Nvidia's graphics processing units (GPUs) have become the backbone of AI research and development. However, as the AI landscape continues to evolve, a new wave of competitors is emerging to challenge Nvidia in this critical sector. These competitors are not only innovating technically but also positioning themselves strategically to capture a significant share of the AI hardware market.

One of the key players in this field is AMD, which has been making significant strides with its Instinct line of data-center accelerators (originally branded Radeon Instinct). AMD's open-source ROCm software stack and its commitment to high-performance computing have helped it gain traction among AI researchers and developers. Drawing on its chip-design expertise, AMD aims to offer a viable alternative to Nvidia's products, particularly on cost-effectiveness and energy efficiency. This positioning could weaken Nvidia's stronghold, especially as organizations seek more sustainable and budget-friendly solutions.

In addition to AMD, several startups are entering the fray with novel approaches to AI hardware. Companies like Graphcore and Cerebras Systems are developing specialized AI processors that they say can outperform GPUs on certain workloads. Graphcore's Intelligence Processing Unit (IPU) and Cerebras' Wafer-Scale Engine (WSE) are designed specifically for AI, with architectures built around the computations that modern AI models require. These startups are challenging Nvidia technically and have attracted significant investment, a sign of growing confidence in their potential to reshape the AI hardware landscape.

Moreover, tech giants such as Google and Amazon are making inroads into the AI hardware market. Google's Tensor Processing Units (TPUs) and Amazon's Inferentia and Trainium chips for AWS show how these companies are using their vast resources and expertise to develop proprietary hardware. By integrating these chips into their cloud services, Google and Amazon are enhancing their own AI capabilities and giving customers alternative tools for accelerating their AI initiatives. This move toward vertical integration could pose a significant challenge to Nvidia, as it signals a shift in how AI hardware is developed and deployed.

Furthermore, the rise of open hardware standards could also affect Nvidia's position. RISC-V, an open instruction set architecture that anyone can implement without paying licensing fees, lowers the barrier for a broader range of companies to design custom processors, including AI accelerators. This could increase competition and innovation as more players enter the market with diverse, specialized solutions. As a result, Nvidia may need to adapt its strategies to maintain its competitive edge in an increasingly crowded and dynamic field.

In conclusion, while Nvidia remains a formidable leader in AI hardware, the landscape is rapidly changing with the emergence of new competitors. Companies like AMD, innovative startups, tech giants, and open-source initiatives are all contributing to a more competitive environment. As these players continue to innovate and expand their offerings, Nvidia will need to navigate this evolving landscape carefully to sustain its leadership position. The future of AI hardware is undoubtedly exciting, with the potential for groundbreaking advancements that could redefine the industry.

The Role of Open-Source AI Models

Another often-overlooked challenge to Nvidia's supremacy in AI is the growing development and adoption of open-source AI models. These models, typically released under licenses that allow others to use, modify, and redistribute them, are playing an increasingly important role in democratizing AI technology and fostering innovation across industries.

Open-source AI models offer several advantages that are gradually reshaping AI development. First, they provide access to cutting-edge models without the licensing fees associated with proprietary alternatives. This is particularly beneficial for startups, academic institutions, and individual developers with limited budgets. By lowering the barrier to entry, open-source models enable a broader range of participants to contribute to AI research and application, thereby accelerating the pace of innovation.

Moreover, the collaborative nature of open-source projects fosters a community-driven approach to problem-solving. Developers from around the world can contribute to the improvement and refinement of these models, leading to rapid iterations and enhancements. This collective effort often results in more robust and versatile AI solutions that can be adapted to a wide array of applications. In contrast, proprietary models may be limited by the resources and strategic priorities of a single company, potentially stifling innovation.

In addition to fostering innovation, open-source AI models can promote transparency and trust. Users can inspect the code and, where they are published, the model weights and training details, which makes it easier to identify potential biases or errors. This transparency matters in an era where AI systems are increasingly used in sensitive areas such as healthcare, finance, and criminal justice. By allowing independent audits and verification, open-source models make it easier to check whether AI systems are fair, accountable, and aligned with ethical standards.

Despite these advantages, the rise of open-source AI models does not necessarily spell the end of Nvidia's dominance. Nvidia has recognized the potential of open-source initiatives and engages actively with the community. Its software libraries and tools, such as CUDA and cuDNN, are free to use (though proprietary rather than open source) and are relied on by popular open-source frameworks to optimize AI workloads on Nvidia hardware. By supporting open-source development, Nvidia strengthens its ecosystem and helps ensure that its hardware remains a preferred choice for running these models.

Furthermore, while open-source models provide the software foundation, the demand for high-performance hardware to train and deploy these models continues to grow. Nvidia’s GPUs, known for their exceptional processing power and efficiency, remain a critical component in the AI infrastructure. As AI models become more complex and data-intensive, the need for powerful hardware solutions is likely to persist, ensuring that Nvidia maintains a significant role in the AI landscape.

In conclusion, while open-source AI models present a formidable challenge to Nvidia’s AI supremacy, they also offer opportunities for collaboration and growth. By embracing the open-source movement and continuing to innovate in hardware development, Nvidia can maintain its leadership position while contributing to the broader advancement of AI technology. As the AI ecosystem evolves, the interplay between open-source models and proprietary hardware will be crucial in shaping the future of artificial intelligence.

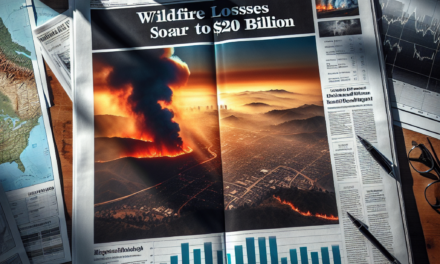

Impact of Global Semiconductor Shortages

The global semiconductor shortage has emerged as a formidable challenge to Nvidia’s dominance in the artificial intelligence (AI) sector, a domain where the company has long been considered a leader. This shortage, which has affected industries worldwide, is not merely a temporary disruption but a significant hurdle that could reshape the competitive landscape of AI technology. As the demand for AI-driven solutions continues to surge, Nvidia’s ability to maintain its supremacy is increasingly being tested by the constraints imposed by limited semiconductor availability.

To understand the impact of this shortage on Nvidia, it is essential to consider the company's reliance on advanced semiconductor manufacturing. Nvidia's graphics processing units (GPUs) are at the heart of many AI applications, from data centers to autonomous vehicles. Nvidia does not operate its own fabrication plants: it designs its chips and relies on contract manufacturers, chiefly TSMC, to produce them on leading-edge processes. The global shortage created bottlenecks in this supply chain, making it difficult for Nvidia to meet the growing demand for its products.

Moreover, the semiconductor shortage has intensified competition among tech companies, all vying for a limited supply of chips. This competition has forced Nvidia to navigate a complex landscape where securing semiconductor components is not only about financial capability but also about strategic partnerships and long-term planning. As a result, Nvidia has had to rethink its supply chain strategies, seeking to diversify its sources and invest in securing future supplies. This shift in strategy is crucial, as any disruption in the supply chain could have significant repercussions on Nvidia’s ability to deliver its AI solutions.

In addition to supply chain challenges, the semiconductor shortage has spurred innovation among Nvidia's competitors. Companies that were previously overshadowed by Nvidia's technological prowess are now exploring alternative approaches to AI hardware, seeking to capitalize on the gaps left by the shortage. For instance, some firms are investing in custom AI chips tailored to their own workloads. Because many of these chips are produced at the same foundries, they are not immune to supply constraints, but they could still give customers new options and challenge Nvidia's market position.

Furthermore, the shortage has highlighted the importance of geopolitical factors in the semiconductor industry. Leading-edge chip manufacturing is concentrated in a few regions, notably Taiwan, so geopolitical tensions can exacerbate supply chain disruptions. Nvidia, like many other tech companies, must navigate these geopolitical dynamics carefully to ensure a stable supply of semiconductors. This involves not only maintaining good relations with manufacturing partners but also advocating for policies that support the growth and resilience of the semiconductor industry.

In conclusion, while Nvidia remains a dominant force in the AI sector, the global semiconductor shortage presents a significant challenge that could alter the competitive dynamics of the industry. The shortage has forced Nvidia to adapt its supply chain strategies, contend with increased competition, and navigate complex geopolitical landscapes. As the company strives to maintain its AI supremacy, it must continue to innovate and collaborate with partners across the semiconductor ecosystem. Ultimately, the ability to overcome these challenges will determine Nvidia’s future role in the rapidly evolving world of artificial intelligence.

Regulatory Challenges in AI Development

While much attention is given to technological advancements and market competition, an often-overlooked challenge to Nvidia's AI supremacy lies in the regulatory landscape governing AI development. As AI technologies evolve rapidly, regulatory frameworks are struggling to keep up, with significant implications for companies like Nvidia whose GPUs power a vast array of AI applications.

To begin with, the regulatory environment for AI is characterized by a lack of uniformity across different jurisdictions. This inconsistency creates a complex web of compliance requirements that companies must navigate. For Nvidia, which operates on a global scale, this means adapting to a myriad of regulations that vary not only from country to country but also within regions of the same nation. Such regulatory fragmentation can lead to increased operational costs and complicate the deployment of AI technologies across borders. Moreover, it can stifle innovation by creating uncertainty about which standards will ultimately prevail.

In addition to regulatory fragmentation, regulations themselves are changing quickly. As governments and international bodies address the ethical and societal implications of AI, new rules and guidelines, such as the European Union's AI Act, are being introduced at an unprecedented pace. For Nvidia, staying ahead of these changes requires significant investment in compliance and legal expertise. This is particularly important as regulations increasingly focus on areas such as data privacy, algorithmic transparency, and bias mitigation. Companies must ensure that their AI systems not only comply with existing laws but can also adapt to future regulatory shifts.

Furthermore, the regulatory scrutiny on AI is intensifying as concerns about its potential risks grow. Issues such as job displacement, surveillance, and decision-making biases have prompted calls for stricter oversight. Nvidia, as a leader in AI hardware, finds itself at the center of these discussions. The company must demonstrate that its technologies are not only powerful but also safe and ethical. This involves engaging with policymakers, participating in public discourse, and contributing to the development of industry standards. By doing so, Nvidia can help shape the regulatory environment in a way that balances innovation with societal needs.

Another layer of complexity arises from the geopolitical dimensions of AI regulation. As countries vie for technological supremacy, AI has become a focal point of international competition. This has led to divergent regulatory approaches, with some nations adopting more stringent measures while others prioritize innovation. For Nvidia, the clearest example is U.S. export controls, introduced in 2022 and tightened since, that restrict sales of its most advanced data-center GPUs to China. Navigating such tensions requires a nuanced understanding of global dynamics and strategic partnerships, and the company must balance its business interests against varying national priorities and regulations.

In conclusion, while Nvidia’s technological prowess and market position are undeniable, the regulatory challenges it faces in AI development are significant and multifaceted. The company must adeptly navigate a fragmented and rapidly evolving regulatory landscape, address growing concerns about the societal impact of AI, and manage geopolitical complexities. By proactively engaging with these challenges, Nvidia can not only maintain its leadership in AI but also contribute to the responsible and sustainable development of this transformative technology. As the regulatory environment continues to evolve, Nvidia’s ability to adapt and influence will be crucial in shaping the future of AI.

The Rise of Custom AI Chips

In recent years, Nvidia has emerged as a dominant force in the realm of artificial intelligence (AI), largely due to its powerful graphics processing units (GPUs) that have become the backbone of AI computations. However, as the demand for AI applications continues to surge, a new challenge to Nvidia’s supremacy is quietly gaining momentum: the rise of custom AI chips. These specialized processors are designed to optimize specific AI tasks, offering a compelling alternative to the general-purpose GPUs that Nvidia is known for.

The development of custom AI chips is driven by the need for more efficient and cost-effective solutions tailored to specific applications. Unlike traditional GPUs, which are designed to handle a wide range of tasks, custom AI chips are engineered to excel in particular areas, such as natural language processing, image recognition, or autonomous driving. This specialization can give them better performance and energy efficiency on their target workloads, which are critical factors in deploying AI technologies at scale.

One of the key players in this burgeoning field is Google, with its Tensor Processing Units (TPUs). These chips are specifically designed to accelerate machine learning workloads and have been instrumental in powering Google’s own AI-driven services. By focusing on the unique requirements of machine learning, TPUs can achieve higher throughput and lower latency compared to general-purpose GPUs. This has enabled Google to enhance the performance of its AI applications while reducing operational costs, setting a precedent for other tech giants to follow.

Similarly, companies like Apple and Amazon are investing heavily in the development of their own custom AI chips. Apple’s A-series chips, for instance, incorporate a neural engine that is optimized for AI tasks, enabling features like facial recognition and augmented reality on its devices. Amazon, on the other hand, has developed the Inferentia chip, which is designed to accelerate machine learning inference in its cloud services. These initiatives highlight a growing trend among major technology companies to develop in-house solutions that cater to their specific AI needs.

Moreover, the rise of custom AI chips is not limited to tech giants. Startups and smaller companies are also entering the fray, driven by the potential to disrupt established markets with innovative solutions. Companies like Graphcore and Cerebras Systems are developing chips that promise to revolutionize AI processing by offering unprecedented levels of performance and efficiency. These new entrants are challenging the status quo and pushing the boundaries of what is possible in AI hardware.

As the landscape of AI hardware continues to evolve, the implications for Nvidia are significant. While the company remains a leader in the GPU market, the growing popularity of custom AI chips presents a formidable challenge. To maintain its competitive edge, Nvidia must adapt to this changing environment by either developing its own specialized chips or enhancing the capabilities of its existing products to better meet the demands of specific AI applications.

In conclusion, the rise of custom AI chips represents a pivotal shift in the AI industry, offering a viable alternative to Nvidia’s GPU dominance. As more companies recognize the benefits of tailored solutions, the competition in the AI hardware market is set to intensify. This evolution not only promises to drive innovation but also to redefine the future of AI technology, as companies strive to deliver more efficient, powerful, and cost-effective solutions.

Energy Efficiency Concerns in AI Processing

Nvidia has long been at the forefront of artificial intelligence (AI) processing, with its graphics processing units (GPUs) serving as the backbone for many AI applications. However, as the demand for AI continues to grow, so too does the need for energy-efficient processing solutions. This presents a significant challenge to Nvidia’s dominance in the AI sector, as energy efficiency becomes an increasingly critical factor in the development and deployment of AI technologies.

The rapid advancement of AI has led to a surge in computational requirements, with models becoming more complex and data-intensive. Consequently, the energy consumption associated with training and deploying these models has skyrocketed. This has raised concerns about the environmental impact of AI technologies, as well as the economic costs associated with their energy use. As a result, there is a growing emphasis on developing AI systems that are not only powerful but also energy-efficient.
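To give a sense of scale, the energy used by a training or inference job can be estimated as device count × power draw × running time, scaled by the data center's power usage effectiveness (PUE, the ratio of total facility energy to IT equipment energy). The following is a minimal sketch, assuming every accelerator draws a constant power for the whole run; the function name and the device counts, wattages, and PUE values in the example are hypothetical and chosen only for illustration.

```python
def training_energy_kwh(num_devices: int, watts_per_device: float,
                        hours: float, pue: float = 1.0) -> float:
    """Estimate total facility energy (kWh) for a job on num_devices accelerators.

    Simplifying assumption: every device draws watts_per_device continuously
    for the full run. pue (power usage effectiveness) = facility energy / IT
    energy, so it scales the IT load up to cover cooling and power delivery.
    """
    it_energy_wh = num_devices * watts_per_device * hours  # IT load in watt-hours
    return it_energy_wh * pue / 1000.0                      # convert Wh to kWh


# Hypothetical example: eight 700 W accelerators for one day at a PUE of 1.2.
small_job = training_energy_kwh(8, 700, 24, pue=1.2)

# Same hardware type scaled to 1,000 devices running for 30 days (720 hours).
large_job = training_energy_kwh(1000, 700, 720, pue=1.2)
```

Under these assumptions the small job comes to 161.28 kWh and the large one to 604,800 kWh, which shows how quickly energy use grows with cluster size and run length. The linear model ignores idle time and varying utilization, so real figures will differ.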

Nvidia’s GPUs have been instrumental in driving AI innovation, offering unparalleled processing power that has enabled breakthroughs in machine learning and deep learning. However, these GPUs are also known for their high energy consumption, which can be a limiting factor in their widespread adoption. As AI applications become more ubiquitous, the need for energy-efficient solutions becomes more pressing, prompting researchers and companies to explore alternative approaches.

One such approach is the development of specialized AI chips, known as application-specific integrated circuits (ASICs), which are designed to perform specific tasks with greater efficiency than general-purpose GPUs. These chips can offer significant energy savings, making them an attractive option for companies looking to reduce their carbon footprint and operational costs. Moreover, the rise of edge computing, where AI processing is performed closer to the data source rather than in centralized data centers, further underscores the importance of energy-efficient AI solutions. Edge devices often have limited power resources, necessitating the use of low-power AI chips that can deliver high performance without draining energy reserves.

In addition to hardware innovations, software optimization plays a crucial role in improving the energy efficiency of AI systems. Techniques such as model compression, quantization (storing weights and activations in lower-precision formats, such as 8-bit integers instead of 32-bit floating-point numbers), and pruning (removing weights that contribute little to the output) can significantly reduce the computational requirements of AI models and thereby lower their energy consumption. Applied carefully, these methods simplify models with little or no loss of accuracy, allowing them to run more efficiently on existing hardware.
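Two of the techniques named above can be illustrated on a plain list of weights. The following is a minimal sketch, assuming symmetric per-tensor int8 quantization and unstructured magnitude pruning; the function names are hypothetical, and production toolkits add calibration, per-channel scales, and fine-tuning on top of these basic ideas.

```python
from typing import List, Tuple


def quantize_int8(weights: List[float]) -> Tuple[List[int], float]:
    """Symmetric per-tensor quantization: map floats onto integers in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    # Guard against an all-zero tensor, which would otherwise give a zero scale.
    scale = max_abs / 127 if max_abs > 0 else 1.0
    # Round each weight to the nearest integer step and clamp to the int8 range.
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q: List[int], scale: float) -> List[float]:
    """Recover approximate float weights from the int8 codes."""
    return [v * scale for v in q]


def magnitude_prune(weights: List[float], sparsity: float) -> List[float]:
    """Zero out the given fraction of weights with the smallest absolute values."""
    k = int(len(weights) * sparsity)  # number of weights to remove
    if k == 0:
        return list(weights)
    # Indices of the k smallest-magnitude weights.
    drop = set(sorted(range(len(weights)), key=lambda i: abs(weights[i]))[:k])
    return [0.0 if i in drop else w for i, w in enumerate(weights)]


weights = [0.5, -1.27, 0.01, 0.8, -0.03, 0.2]
codes, scale = quantize_int8(weights)
approx = dequantize(codes, scale)
pruned = magnitude_prune(weights, sparsity=0.5)
```

Each int8 code takes a quarter of the storage of a 32-bit float, and the reconstruction error per weight is at most half a quantization step. Pruning at 50% sparsity here zeroes the three smallest weights (0.01, -0.03, 0.2); the energy savings depend on hardware or kernels that can actually skip those zeros.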

Furthermore, integrating renewable energy sources into data centers and AI infrastructure is another strategy for mitigating the environmental impact of AI processing. Powering AI operations with solar, wind, and other renewable energy does not reduce their energy demand, but it can substantially cut the associated carbon emissions, contributing to a more sustainable future.

While Nvidia continues to lead in AI processing power, the growing emphasis on energy efficiency presents a formidable challenge that cannot be overlooked. As the industry evolves, Nvidia will need to adapt by investing in energy-efficient technologies and strategies to maintain its competitive edge. This may involve developing new hardware solutions, optimizing existing software, and embracing sustainable energy practices.

In conclusion, the quest for energy-efficient AI processing is reshaping the landscape of AI technology, presenting both challenges and opportunities for industry leaders like Nvidia. As the demand for AI continues to rise, the ability to balance performance with energy efficiency will be crucial in determining the future trajectory of AI development and deployment.

Strategic Partnerships and Alliances in AI Industry

Nvidia has long been recognized as a dominant force in the artificial intelligence (AI) industry, primarily due to its cutting-edge graphics processing units (GPUs) that power a vast array of AI applications. However, an often-overlooked challenge to Nvidia’s supremacy in this rapidly evolving field is the strategic partnerships and alliances forming among other tech giants and emerging companies. These collaborations are reshaping the competitive landscape, offering alternative solutions that could potentially rival Nvidia’s offerings.

To begin with, the AI industry is characterized by its rapid pace of innovation and the necessity for companies to adapt quickly to new technological advancements. In this context, strategic partnerships have become a crucial element for companies seeking to enhance their capabilities and expand their market reach. For instance, collaborations between hardware manufacturers and software developers can lead to the creation of more integrated and efficient AI solutions. This synergy allows companies to leverage each other’s strengths, thereby accelerating the development of new technologies and reducing time-to-market.

Moreover, alliances between tech giants and startups are particularly noteworthy. Established companies often possess the resources and market presence that startups lack, while startups bring fresh ideas and innovative approaches to the table. By joining forces, these entities can create a powerful combination that challenges the status quo. For example, partnerships between cloud service providers and AI-focused startups have resulted in the development of specialized AI platforms that offer enhanced performance and scalability. These platforms provide an attractive alternative to Nvidia’s offerings, especially for businesses seeking cost-effective and flexible solutions.

In addition to these collaborations, the open-source movement is playing a significant role in shaping the AI industry. Open-source AI frameworks and tools have democratized access to advanced technologies, enabling a wider range of companies to participate in AI development. This has led to the emergence of new players who can compete with established giants like Nvidia. By contributing to and benefiting from open-source projects, companies can build upon existing technologies and create innovative solutions that cater to specific market needs.

Furthermore, the increasing importance of data in AI development cannot be overlooked. Strategic partnerships that focus on data sharing and collaboration are becoming more prevalent, as companies recognize the value of diverse and high-quality datasets. These alliances enable organizations to pool their resources and expertise, resulting in more robust AI models and applications. As a result, companies that can effectively harness the power of data through strategic partnerships may pose a significant challenge to Nvidia’s dominance.

It is also important to consider the role of government and academic institutions in fostering strategic partnerships within the AI industry. Public-private partnerships and collaborations with research institutions can drive innovation and facilitate the development of cutting-edge technologies. By working together, these entities can address complex challenges and push the boundaries of what is possible in AI. Such collaborations can lead to breakthroughs that disrupt the market and offer viable alternatives to Nvidia’s solutions.

In conclusion, while Nvidia remains a formidable player in the AI industry, the strategic partnerships and alliances forming among other companies present a significant challenge to its supremacy. By leveraging each other’s strengths, these collaborations are driving innovation and creating new opportunities in the AI landscape. As the industry continues to evolve, it will be essential for Nvidia to adapt and engage in strategic partnerships of its own to maintain its competitive edge.

Q&A

1. **What is the main challenge to Nvidia’s AI supremacy?**

The main challenge to Nvidia’s AI supremacy is the emergence of competitive AI hardware and software solutions from other companies, which are offering alternatives to Nvidia’s dominant GPU technology.

2. **Which companies are posing a threat to Nvidia’s dominance in AI?**

Companies like AMD, Intel, Google, and several startups are developing advanced AI chips and technologies that could potentially rival Nvidia’s offerings.

3. **How is AMD challenging Nvidia in the AI space?**

AMD is challenging Nvidia by developing its own line of GPUs and AI accelerators that are designed to compete in terms of performance and efficiency, often at a lower cost.

4. **What role does software play in the competition against Nvidia?**

Software plays a crucial role as companies are developing optimized AI frameworks and libraries that can run efficiently on non-Nvidia hardware, reducing dependency on Nvidia’s CUDA ecosystem.

5. **How is Google contributing to the challenge against Nvidia?**

Google is contributing by advancing its Tensor Processing Units (TPUs), which are specifically designed for AI workloads and offer an alternative to Nvidia’s GPUs in data centers.

6. **What is the significance of open-source AI frameworks in this competition?**

Open-source AI frameworks, such as TensorFlow and PyTorch, are significant because they provide flexibility and support for various hardware platforms, enabling developers to choose alternatives to Nvidia’s solutions.

7. **What impact could these challenges have on Nvidia’s market position?**

These challenges could lead to increased competition, potentially reducing Nvidia’s market share and forcing the company to innovate further and adjust its pricing strategies to maintain its leadership in the AI industry.

Conclusion

Nvidia’s dominance in the AI hardware market, particularly with its GPUs, faces a significant challenge from emerging competitors and technological shifts. Companies like AMD, Intel, and various startups are developing alternative AI accelerators and specialized chips that promise improved efficiency and performance. Additionally, the rise of open-source software and hardware initiatives, along with advancements in quantum computing and neuromorphic chips, could disrupt Nvidia’s stronghold. As AI workloads diversify and demand for more tailored solutions grows, Nvidia must innovate continuously and adapt to these evolving technologies to maintain its leadership position in the AI industry.